
How does false information spread on Twitter during an attack? The case of Nice

Some time ago, I published a research article in the journal Le Temps des Médias with Camille Alloing on the production of digital rumors. It was time to address this with a more educational approach. The goal was to see how rumors are digitally manufactured and whether we could discover a model.

Key findings

You will find the analysis and methodology further down in the article, but here are the main takeaways:

1- False information needs novelty to survive. We observe changes and alterations in false information that allow it to persist until disproof occurs.

2- False information is more often echoed than produced. Only about 9% of messages relay the false information directly.

3- False information is shared by young people and ecosystems far from traditional media and institutions. Young people mainly share the rumors, and those who spread them do not follow media. Only 2.3% of people who propagated the rumor followed the Ministry of the Interior's account, compared to 5.3% of those who disproved it.

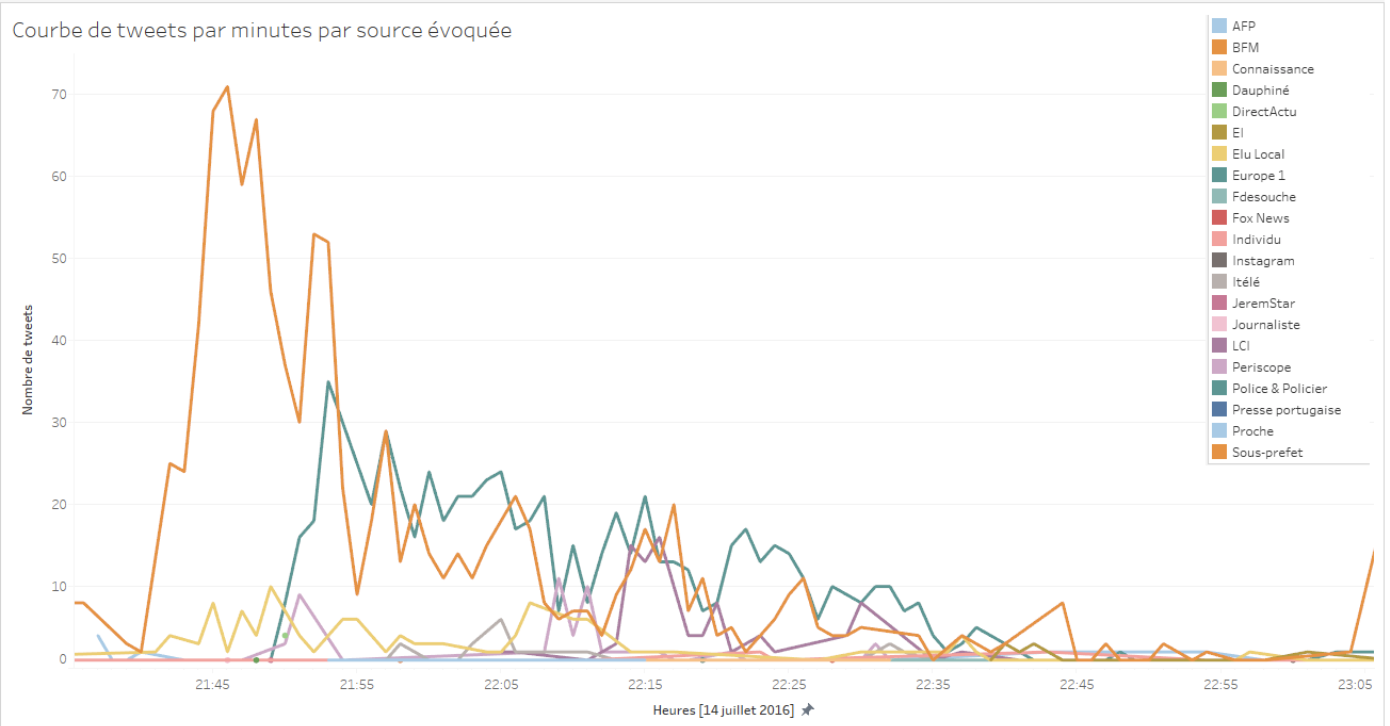

4- False information is attributed to the media. Authoritative sources such as BFM, a local official, the police, or LCI attract more attention than on-site individuals or those attributing the rumor to someone close.

5- Official disproof kills false information and is more visible. Disproof is far more visible, primarily produced by authorities.

6- The importance of secondary sources in spreading false information during attacks. Primary witnesses are more reliable, whereas secondary witnesses often misinterpret events.

7- False information during attacks is shared by people who are not present.

Analysis

1. False information needs novelty to survive

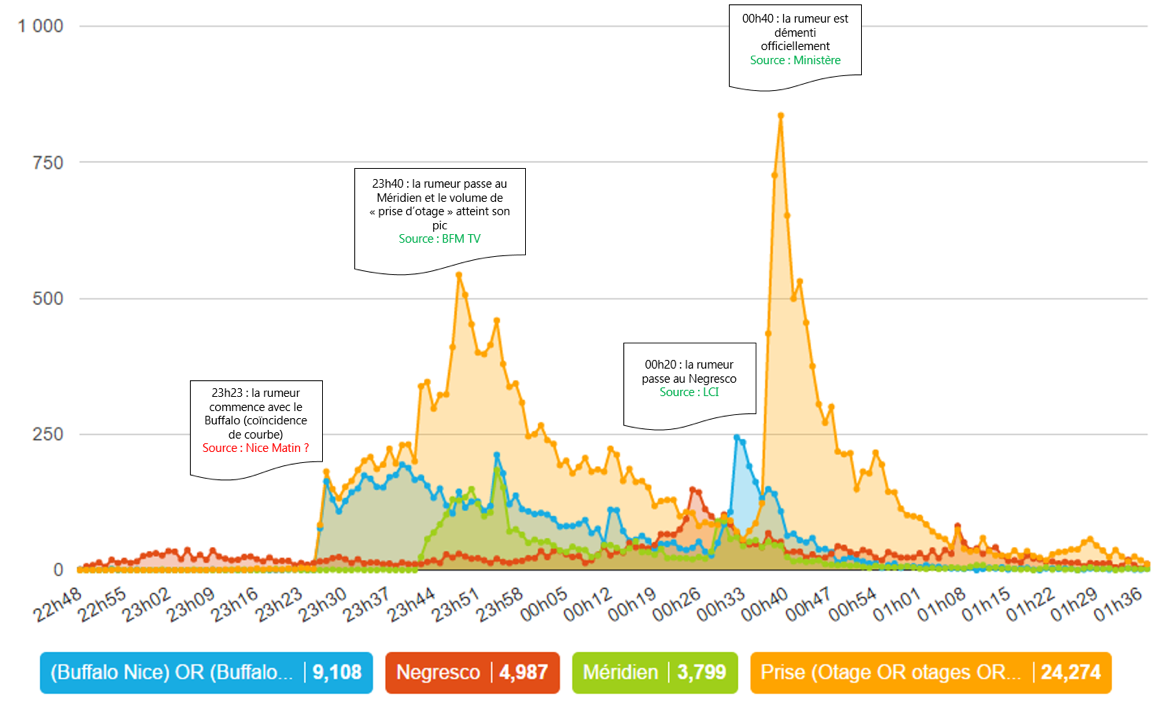

Following the sequence of events, we see the alleged hostage-taking move from one place to another: the rumor starts at Buffalo Grill, moves on to the Méridien, and ends at the Negresco before it is completely debunked.

2. False information is more echoed than produced

Looking at the distribution of message types, most messages turn out to be attributions and refutations. The bare rumor accounts for only 9.4% of messages. In the end, hearsay is what ensures the rumor is seen and read.

| Type of message | Mechanism | Example | Volume (%) |
|---|---|---|---|
| Rumor | Rumors are relayed without any conditional or attribution | "Hostage-taking at the Méridien. #nice#attentatsNice" | 9.4 |
| Attribution | Rumors are relayed with attribution | "BFM mentions the possibility of a hostage-taking by the murderers at the Méridien on the Promenade des Anglais in #Nice" | 23.6 |
| Comments | Rumors are relayed only to comment on them | "The terrorists are holding a hostage at Buffalo Grill even though it's not even halal" | 9.1 |
| Questioning | Rumors are relayed in the form of a question | "Is there a hostage-taking at the Méridien hotel?" | 2.8 |
| Warning | Rumors are relayed in the form of a warning | "Stay home... hostage-taking at the Méridien." | 1.4 |
| Over-Specification | Additional elements are added | "An assailant really barricaded himself in Buffalo Grill in Nice, he was 'neutralized,' and he had a handgun on him." | 0.6 |
| Conspiracy | Rumors fit into a "conspiratorial" vision | "And why are they hiding the two hostage-takings on TV? #bfm #Nice" | 0.1 |
| Justification | The profile that relayed the rumor justifies itself later | "To clarify, I wasn't talking about a hostage-taking, but a barricaded man; that's different. But now isn't the time for controversy." | 0.1 |
| Critique | Profiles relaying the rumor are urged not to do so | "I haven't seen any info about a hostage-taking. It's best to wait for official information to avoid foolishness." | 3.6 |
| Doubt | Rumors are relayed or refuted, but doubt about their truthfulness is expressed | "#Nice No confirmation of a hostage-taking, but stay off the streets." | 4.5 |
| Refutation | Rumors are refuted | "No, I just called Buffalo Grill in Nice: they didn't have anything except a crowd movement :)" | 44.8 |
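As a quick check on the shares in the table above, the message types can be grouped into a rumor side and a refutation side. This is only a sketch: the grouping (Doubt, Critique, and Refutation on the refutation side, everything else on the rumor side) is my reading of the typology described in the methodology, and the variable names are illustrative.

```python
# Shares (in %) copied from the table above.
shares = {
    "Rumor": 9.4, "Attribution": 23.6, "Comments": 9.1, "Questioning": 2.8,
    "Warning": 1.4, "Over-Specification": 0.6, "Conspiracy": 0.1,
    "Justification": 0.1, "Critique": 3.6, "Doubt": 4.5, "Refutation": 44.8,
}

# Assumption: these three types form the "refutation" side of the typology.
REFUTATION_SIDE = {"Doubt", "Critique", "Refutation"}

refutation_share = round(
    sum(v for k, v in shares.items() if k in REFUTATION_SIDE), 1
)
rumor_share = round(
    sum(v for k, v in shares.items() if k not in REFUTATION_SIDE), 1
)

print(f"rumor side: {rumor_share}%, refutation side: {refutation_share}%")
```

Under this grouping, the refutation side slightly outweighs the rumor side, while the bare rumor remains a small fraction of the messages.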

3. False information is shared by young people and ecosystems far from traditional media and institutions

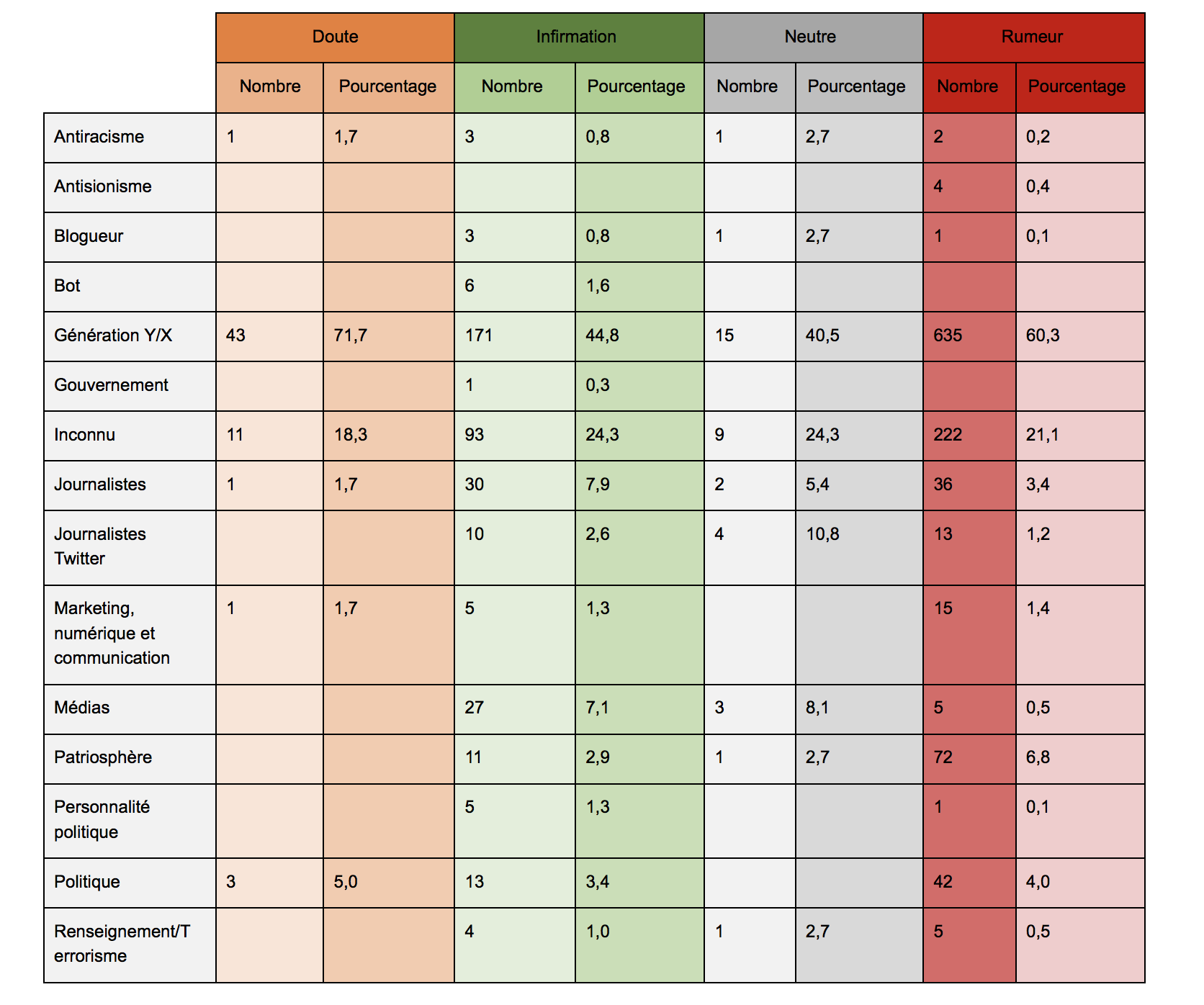

The distribution shows that people from Generation X/Y and actors of the patriosphere are particularly involved in creating rumors, while they are significantly less involved in creating refutations.

When comparing the statistics of rumor actors and refutation actors, we notice that those involved in rumors have significantly smaller audiences than those involved in refutation. The potential audience, based solely on total followers, is more than four times higher for refutation. Rumor actors are also more active, with more tweets per account on average.

| | Rumor | Refutation |
|---|---|---|
| Total likes | 54,343,373 | 57,521 |
| Total followed | 5,122,014 | 5,695,325 |
| Total followers | 9,296,852 | 41,904,637 |
| Total tweets | 192,322,235 | 217,649,27 |
| Average likes | 9,234 | 7,464 |
| Average followed | 870 | 739 |
| Average followers | 1,580 | 5,437 |
| Average tweets | 32,680 | 28,240 |
| Median likes | 2,684 | 2,076 |
| Median followed | 386 | 380 |
| Median followers | 466 | 375 |
| Median tweets | 15,099 | 11,285 |
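The audience gap can be checked from the follower figures in the table above. The snippet below is a minimal sketch that assumes the dots in the original figures are thousands separators; the dictionary keys are illustrative names, not fields from the dataset.

```python
# Follower figures from the table above (thousands separators removed).
rumor = {
    "total_followers": 9_296_852,
    "average_followers": 1_580,
    "median_followers": 466,
}
refutation = {
    "total_followers": 41_904_637,
    "average_followers": 5_437,
    "median_followers": 375,
}

# Ratio of refutation to rumor for each statistic.
ratios = {key: round(refutation[key] / rumor[key], 2) for key in rumor}

for key, ratio in ratios.items():
    print(f"{key}: refutation / rumor = {ratio}")
```

The total and average ratios are well above 1 while the median ratio is below 1, which would be consistent with a few very large accounts (media, authorities) carrying most of the refutation audience.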

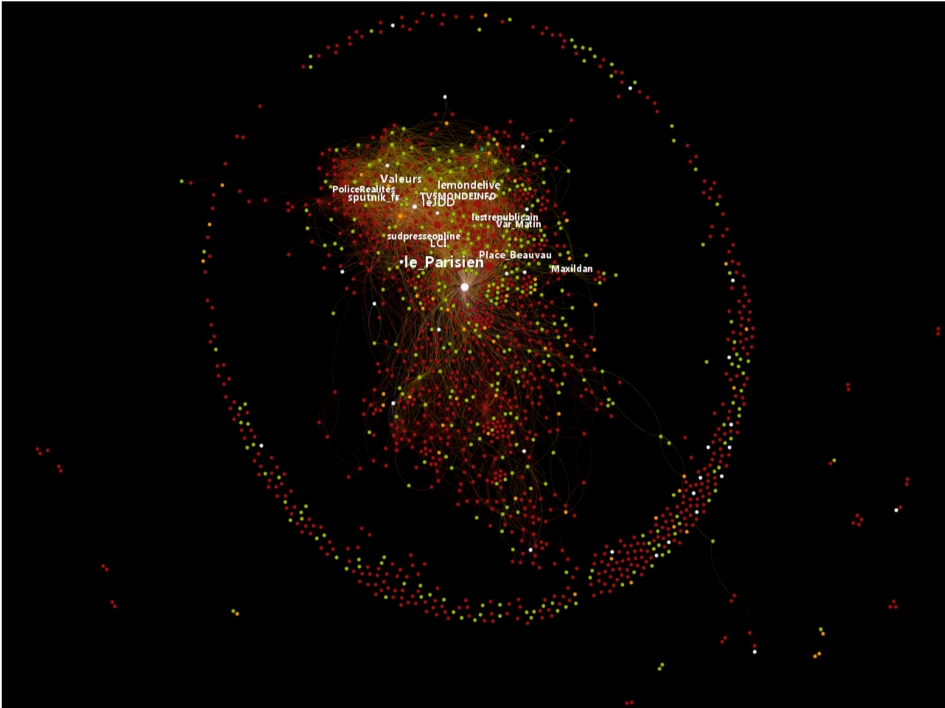

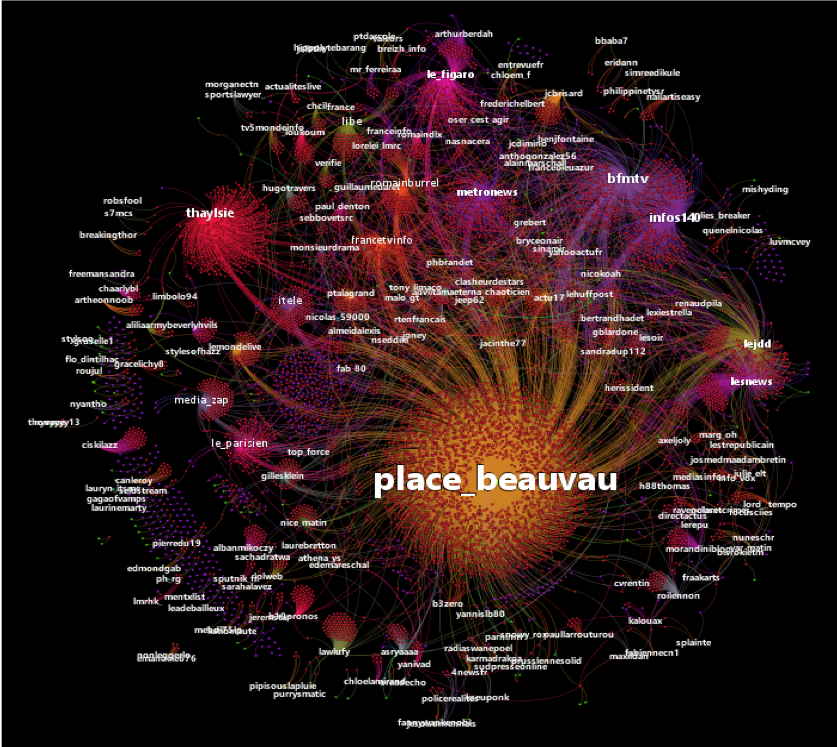

Looking at the relationships between these accounts, colored by whether they shared false information (red), doubt (orange), or information (green), several patterns emerge.

We see that many people who tweeted about the false information in Nice neither follow nor are followed by any other individuals who also tweeted about the rumors in Nice. This suggests that during the attacks, people either go beyond their own timeline to get information from other sources or receive the rumor through retweets.

Another lesson is that the creation of refutation is mainly done by people who are close to the media community and by those who are not isolated in terms of relationships. In contrast, people who share false information do not follow the media.

Finally, only 2.3% of people who spread the rumor follow the Ministry of the Interior's account, compared to 5.3% of those who refuted the rumor, showing that they do not follow institutions either.
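The observation that many accounts neither follow nor are followed by other accounts in the corpus amounts to finding isolated nodes in the follow graph restricted to participants. The sketch below illustrates the idea on made-up data; the function name, account names, and follow pairs are all hypothetical and do not come from the study.

```python
def isolated_accounts(participants, follows):
    """Return participants with no follow link to another participant.

    participants: set of account names that tweeted about the rumor.
    follows: iterable of (follower, followed) pairs.
    """
    connected = set()
    for follower, followed in follows:
        # Only links where both ends are participants count.
        if (follower in participants and followed in participants
                and follower != followed):
            connected.add(follower)
            connected.add(followed)
    return participants - connected


# Hypothetical toy data, for illustration only.
participants = {"a", "b", "c", "d"}
follows = [("a", "b"), ("c", "media_x"), ("z", "d")]

isolated = isolated_accounts(participants, follows)
print(sorted(isolated))
```

Here "c" and "d" come out as isolated: each has a follow link, but not with another account that tweeted about the rumor.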

4. False information is attributed to the media

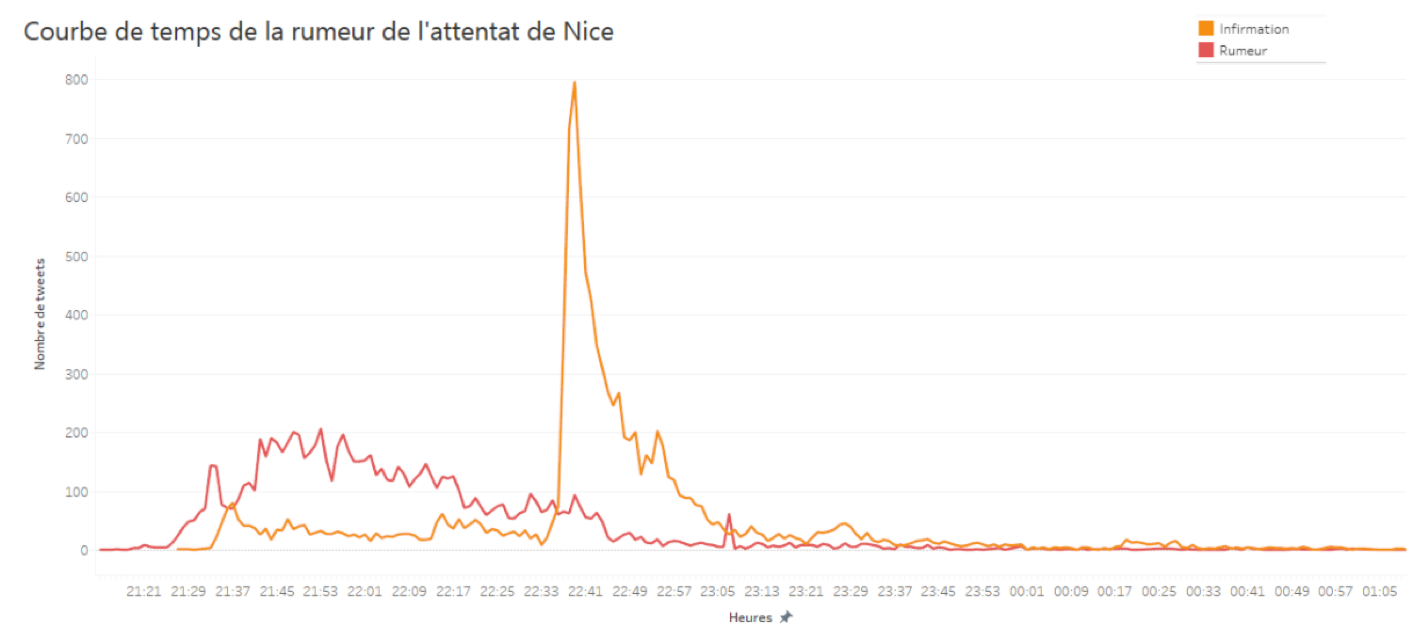

When observing the attribution curve without retweets, we notice a total lack of consistency. Once retweets are added, we get the following curve:

We notice that authoritative sources such as BFM, a local official, the police, or LCI attract the most attention, more than people present on-site or people who attribute the rumor to a relative.

5. Authorities' refutation kills false information and is more visible than false information

In terms of production, refutation surpasses false information:

Moreover, it is far more visible in terms of audience, as the analysis of authors has shown. Additionally, refutation almost instantly kills false information:

Furthermore, when analyzing information dissemination networks, we see the central and significant role played by authorities:

6. The importance of secondary sources in disseminating false information during the attacks

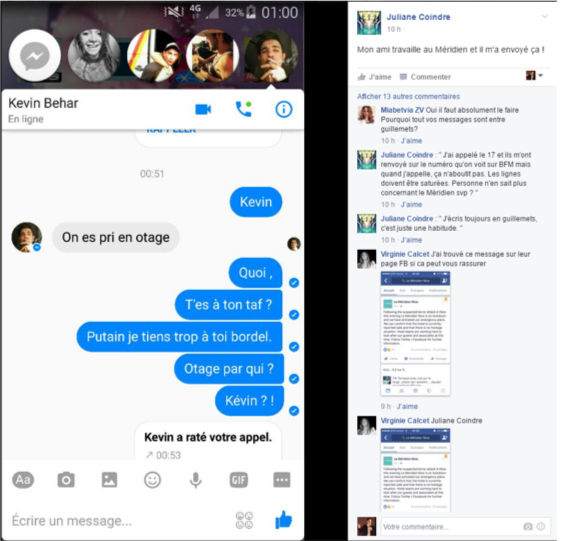

Generally speaking, people who share false information during attacks do not do so with malicious intent. In reality, most of the time, we observe attempts at verification and evidence coming from real witnesses who are in fact secondary sources.

Very often, then, the sharing comes from real witnesses who see real things but interpret them incorrectly. In the case of the hostage-takings in Nice, people who saw police officers in front of buildings with people locked inside interpreted this as a hostage-taking. The people outside are secondary witnesses, whereas the people inside the building are primary witnesses.

To explain the mechanism, let's take the example of a widely shared post about a piece of Ikea furniture. A user shows a piece of furniture named "Jävel" and suggests that Ikea is mocking people, since the Swedish word translates to "jerk." To verify the information, most people go to Google Translate and see that Jävel does indeed mean jerk. Reassured by this check, they hurry to share it. However, the real verification is not on Google Translate: it is to check whether the furniture is really named Jävel, which it is not! The piece is actually called Orrberg. The mechanism during the attacks is the same: people think the person is on-site and thus trust them. However, that person is not a direct witness but an indirect one.

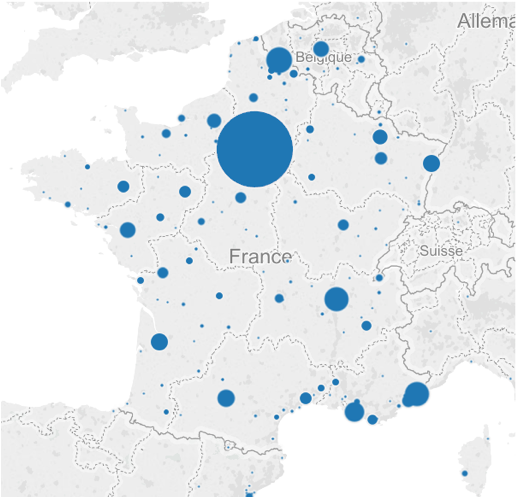

7. False information during the attacks is shared by people who are not on-site

It may not surprise anyone, but false information during the attacks is spread by many people who are not on-site:

Methodology

The analysis conducted by Camille Alloing and myself focused on three rumors that circulated on the Twitter platform (and beyond) during the July 14, 2016, attack in Nice: three hostage-takings were said to have taken place at the Negresco and Méridien hotels, as well as at the Buffalo Grill restaurant in the city center.

Using Twitter's "public" API, we collected messages (tweets) containing the names of these hotels and the restaurant, as well as the French terms "prise" and "otage" (as in "prise d'otage," hostage-taking).

The exact query was: "((Buffalo Grill) OR (Buffalo Nice) OR Negresco OR Méridien OR (Prise AND (otage OR Otage OR otages OR Otages)))".
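To make the logic of the query concrete, here is a minimal sketch of an equivalent local filter. It is not the code used for the collection, which relied on Twitter's search. It assumes that terms grouped in parentheses must all be present, that matching is case-insensitive, and that accents are not folded; `matches_query` is a hypothetical helper name.

```python
import re


def tokens(text):
    """Return the set of lowercased word tokens in a message."""
    return set(re.findall(r"\w+", text.lower()))


def matches_query(text):
    """Approximate the boolean query above on a single message."""
    words = tokens(text)
    return (
        {"buffalo", "grill"} <= words          # (Buffalo Grill)
        or {"buffalo", "nice"} <= words        # (Buffalo Nice)
        or "negresco" in words
        or "méridien" in words
        # (Prise AND (otage OR Otage OR otages OR Otages))
        or ("prise" in words and bool({"otage", "otages"} & words))
    )


print(matches_query("Prise d'otages au Negresco"))   # True
print(matches_query("Prise de parole du ministre"))  # False
```

Because accents are not folded in this sketch, a message spelling the hotel "Meridien" would only match through another clause of the query.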

We collected 23,323 messages, which we then categorized based on their nature: original message, repost (retweet), and response to a message.

| | Tweets | Retweets | Replies | Total |
|---|---|---|---|---|
| Number | 1,962 | 20,072 | 1,289 | 23,323 |
| Percentage | 8.4% | 86.1% | 5.5% | 100% |
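The percentages in the table above follow directly from the counts, as this short sketch shows (the variable names are illustrative):

```python
# Message counts by type, from the table above.
counts = {"Tweets": 1962, "Retweets": 20072, "Replies": 1289}

total = sum(counts.values())
shares = {kind: round(100 * n / total, 1) for kind, n in counts.items()}

print(total)
print(shares)
```

Retweets make up the overwhelming majority of the corpus, which is why the analysis distinguishes production from propagation.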

Manual coding of the posts

I then manually coded each of the tweets related to these false hostage-taking claims. We categorized the different messages as follows:

Rumor: The message conveys the rumor.

Example: "HOSTAGE TAKING AT MERIDIEN HOTEL IN NICE"

Refutation: The message refutes the rumor.

Example: "No hostage situation at Méridien in #Nice. My mom took shelter there and is safe. The police are outside."

No: The message is unrelated to the rumor but pertains to the attack. Messages unrelated to the attack were deleted.

Example: "1/3 Following the alleged terrorist attack tonight in Nice, Le Méridien Nice has been placed in lockdown."

We then categorized the messages using a second typology. For the rumor, it could be:

Rumor: The rumor is repeated without any conditional phrasing or attribution.

Example: "Hostage taking at Méridien. #nice #attacksNice"

Repetition: The rumor is repeated with conditional phrasing or attribution.

Example: "BFM mentions the possibility of a hostage situation by the attackers at the Méridien on the Promenade des Anglais in #Nice"

Comment: The rumor is only mentioned to comment on it.

Example: "The terrorists are taking hostages at Buffalo Grill and it’s not even halal"

Questioning: The rumor is presented in the form of a question.

Example: "Is there a hostage-taking at the Méridien hotel? #bfm"

Warning: The rumor is mentioned to warn people.

Example: "Stay at home....hostage situation at Meridien."

Addition: An addition has been made to the rumor.

Example: "@lawlufy One assailant did take shelter in the Buffalo Grill in Nice, he was 'neutralized' and had a handgun on him."

Conspiracy: The rumor is framed as part of a conspiracy ("Something is being hidden from us").

Example: "And why are they hiding the two hostage situations on TV? #bfm #Nice"

Defense: The person who repeated the rumor later defends themselves.

Example: "@MorganCine note that I wasn't talking about a hostage-taking but a man taking shelter; it’s different. But now is not the time for controversy."

For the refutation, it could be:

Doubt: The rumor is questioned.

Example: "@Toyan66 Méridien? judging by the TV images, the cameras are filming more towards Palais and Negresco, so they are in front of the Méridien @rumeursduweb"

Critique: The repetition of the rumor is criticized.

Example: "Twitter has become BFM TV 2.0, yuck, terrorism, attack, hostage-taking, where do you get this from?"

Refutation: The rumor is refuted.

Example: "#Nice No hostage-taking (@PHBrandet spokesperson ministry)"

Author analysis

To analyze the authors, we only examined authors of original tweets.

However, establishing an author typology on Twitter is complex because the factors that could qualify an author are multiple (demographics, interests, occupation, profession, etc.).

This complexity undermines the exclusivity principle of coding required in content analysis. For this reason, we ranked the criteria for qualifying a profile in the following order of priority:

- Profession

- Occupation

- Interest
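The priority order above can be read as a simple rule: each profile receives the first code available, in the order profession, occupation, interest. The sketch below is one possible way to express it; `primary_code` and the dictionary layout are hypothetical, and falling back to "Unknown" reflects the "Unknown" code listed among the professions.

```python
# Criteria in decreasing order of priority, as listed above.
PRIORITY = ("profession", "occupation", "interest")


def primary_code(profile_codes):
    """Return (criterion, code) for the highest-priority code present.

    profile_codes: dict mapping a criterion name to the code observed
    on the profile, for example {"interest": "Politics"}.
    """
    for criterion in PRIORITY:
        code = profile_codes.get(criterion)
        if code:
            return criterion, code
    # Nothing could be determined from the public profile.
    return "profession", "Unknown"


print(primary_code({"interest": "Politics", "profession": "Journalists"}))
print(primary_code({"occupation": "Blogger", "interest": "Politics"}))
```

Assigning a single code per profile in this way is what keeps the categories mutually exclusive.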

After examining the corpus, our categorization was developed around the following codes:

Professions

Bot: The profile is a "bot," an automatic or semi-automatic device that interacts with the platform as a human would. These profiles automatically distribute content from websites or predefined lists.

Government or Institutional Accounts: The profile is managed by government authorities.

Unknown: Based on the available information on the public profile, it was not possible to categorize this actor in any other category.

Journalists: The profile identifies as a journalist.

Twitter Journalists: The profile is that of a "Twitter journalist," i.e., a continuous information account only present on Twitter, presenting itself as providing information in 140 characters.

Marketing, Digital, and Communication: The profile belongs to someone working in or having a strong interest in marketing, communication, or digital topics.

Media: The profile belongs to a media organization.

Intelligence/Terrorism: The profile belongs to a consultant in the field of intelligence and/or terrorism.

Political Figure: The profile belongs to a political figure holding an office.

Occupation

Blogger: The profile is that of a blogger.

Interest

Anti-racism: The profile identifies itself as anti-racist, and the shared content confirms this categorization. Anti-racism communities reject all forms of discrimination.

Anti-Zionism: The profile identifies itself as anti-Zionist, with content primarily opposing the state of Israel.

Generation Y/X: In our categorization of actors, we also identified a majority of accounts whose biography highlights interests or references that could be linked to adolescence (video games, reality TV, school references, etc.), profile pictures representing young people (estimated to be between 15 and 25 years old), etc. In short, a fairly homogeneous self-presentation that could refer to Generation X and Y.

Patriosphere: The profile belongs to communities called the "patriosphere," also known as the "fachosphere" or "reacosphere." The difference between the concepts of fachosphere/reacosphere and patriosphere is that these communities directly identify themselves as part of the patriosphere. This community is composed of traditionalist Catholics, neo-fascist activists, anti-leftists, or Islamophobes.

Politics: The profile belongs to someone with a strong interest in politics, evidenced by an overrepresentation of this theme in the account's editorial activity.

Study limitations

Exhaustiveness

The sample of tweets was retrieved through Twitter's public API, so it is not exhaustive. The representativeness of the public API has not been extensively studied in the scientific literature, except for the difference between Twitter's Streaming API and Twitter's private API. We compared the volume of tweets retrieved by NodeXL with the volume of tweets available via Gnip (the official Twitter data provider) and can state that our sample contains at least 90% of the tweets.

Temporal analysis

The analysis of the authors was conducted in December 2016. Many profiles had disappeared by then (changed username, account banned by Twitter, or deleted account). Thus, of the 1,779 authors of original tweets, only 1,532 (about 86%) were still available.

Country analysis of authors

The analysis of countries is biased because many international actors' tweets were included in our study, as they tweeted about the various hostage locations (Méridien, Buffalo Grill, Negresco). However, their refutations of the rumor were not necessarily captured, as the only hostage-taking terms we retrieved were in French. It is therefore possible that they refuted the rumor using "hostage taking," but such tweets were not included in our study.