Many journalists are currently questioning social media analysis following the Yellow Vests phenomenon, particularly since the publication of a

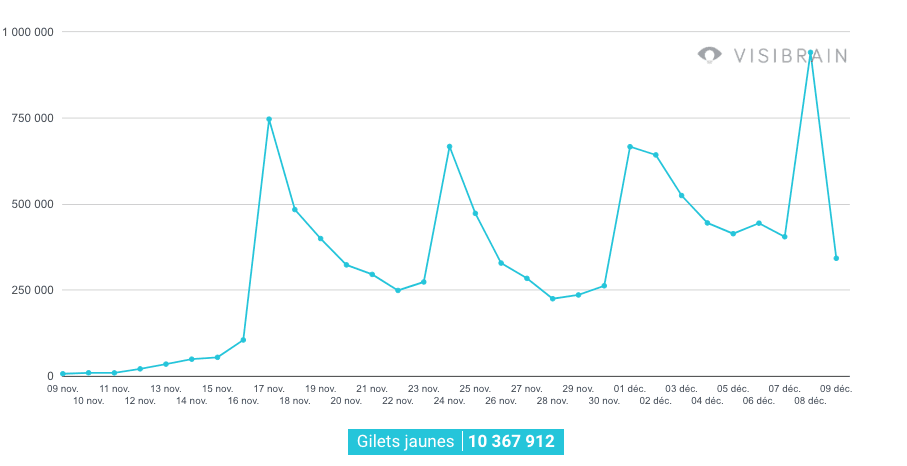

Times article featuring an analysis by a cybersecurity firm. Naturally, we are at 10 million tweets in a month. The volume has now exceeded what is possible to analyze at a simple scale (API, processors, etc.).

This volume, like that of the Benalla affair, exceeds all previously observed volumes. The Paris attacks generated 1 million tweets, London 2 million globally, Berlin 300,000, Nice 1.5 million globally, and "Balance Ton Porc" in France 450,000 over five months. This sudden increase in volume since the summer raises questions:

- Is it a demographic evolution of Twitter? (More active users?)

- A change in usage? (More intense activism?)

- Political tension? (A convergence of movements against Macron?)

- Another explanation?

A. Barriers to analysis

Currently, analyzing this phenomenon takes longer than the speed at which information circulates, and faces several obstacles:

- Researchers are paid and evaluated based on their propensity to publish scientific articles. Current affairs analysis is not part of their mission, despite the vague label of "service to society."

- Article publication is oriented more toward theory-building than toward describing reality. This means that researchers must relate their analysis of current events to existing theories (attention economy, click workers, etc.).

- Researchers must build their teams quickly to capture data promptly. Facing a torrent of 10 million tweets, they need to go beyond quantitative analysis to avoid clichés. Working alone is therefore impossible; the analysis requires time and people.

- Additionally, the fact that the data is only available on Twitter and not on other social networks is also an inherent bias. There has been a migration of influence behaviors from Twitter to Facebook, which is logical since Facebook is a "wild west" where only Facebook is the sheriff. Initially, it was easier for troll factories to establish themselves on a public open social network than on a private one. The use of private groups has meant that this is no longer the case, and Twitter is now just the tip of the iceberg.

B. Indicators used to establish interference and their biases

The issue of possible foreign interference arises next. Such interference is extremely difficult to prove. A pseudonym remains a pseudonym, and current methods are very far from the standards of the hard sciences, where the goal is to prove reality beyond doubt. Researchers are forced to look for indicators. These indicators have all the weaknesses of traditional indicators, as they can be manipulated or explained by other variables. Indicators from other fields, such as GDP, the unemployment rate, the happiness index, or the average salary (considered irrelevant and replaced by the median salary), show that finding an unbiased indicator is very complex. An indicator is merely an indication, not a value in itself. Currently, the indicators used in foreign interference studies are:

- Degree of Production: Much of the scientific literature points to sockpuppets and bots when a certain level of activity is observed, generally between 800 and 1,200 tweets per day (almost a tweet per minute without sleeping). The assumption is that if there is a troll factory, it must operate in shifts of activity lasting 8 hours or more. However, as the Benalla affair showed, these ultra-active authors also include many unemployed individuals who are highly politically motivated and retweet a lot. This indicator is therefore merely a sign of possible contamination and is subject to many biases. Some also criticize counting retweets, though this criticism is not well supported, since it is easier for troll factories to retweet than to create original messages.

- Comparison with a Pro-Russian Ecosystem: Another method is to compare the ultra-active accounts to a reference pro-Russian ecosystem. This method assumes that if there is troll farming, it also serves to push media with a pro-Russian lens (Sputnik, RT). It has provided good indicators for identifying disinformation networks but suffers from a major bias: correlation is not causation. It is logical that disinformation targets those in power and that it therefore overlaps with the counter-power stance taken by these media. Thus, while it can be a good starting indicator or method for initial monitoring, it cannot prove Russian interference.

- Comparison with a Database of Previous Disinformation: Building a database of previous disinformation to identify new cases has been a successful method but has a significant problem: the rapid destruction of the database due to Twitter account suspensions. Furthermore, in some disinformation operations (e.g., claims of Emmanuel Macron being funded by Saudi Arabia or the use of Mediapart/L'Express blogs to suggest a "Macron Cahuzac" connection), the networks used for propagation were created for the occasion and thus could not be found in the databases.
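To make the first two indicators concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not the method used by any of the studies mentioned: the function names, the input format (pairs of author and ISO timestamp) and the use of media labels instead of real URLs are all hypothetical, and the threshold is simply the lower bound of the 800 to 1,200 range quoted above. As with the indicators themselves, a flagged account is a sign worth investigating, not proof of anything.

```python
from collections import Counter
from datetime import datetime

# Assumption: lower bound of the 800-1,200 tweets/day range cited in the text.
ULTRA_ACTIVE_THRESHOLD = 800


def peak_daily_counts(tweets):
    """Return {author: highest number of tweets posted in a single calendar day}.

    `tweets` is an iterable of (author, iso_timestamp) pairs (hypothetical format).
    Retweets are counted like any other tweet.
    """
    per_day = Counter()
    for author, timestamp in tweets:
        day = datetime.fromisoformat(timestamp).date()
        per_day[(author, day)] += 1

    peaks = {}
    for (author, _day), count in per_day.items():
        peaks[author] = max(peaks.get(author, 0), count)
    return peaks


def flag_ultra_active(tweets, threshold=ULTRA_ACTIVE_THRESHOLD):
    """Return the set of authors whose busiest day reaches the threshold."""
    return {a for a, n in peak_daily_counts(tweets).items() if n >= threshold}


def reference_share(shared_media, reference_media):
    """Fraction of an account's shared links that point to the reference ecosystem.

    `shared_media` is a list of media labels (one per shared link);
    `reference_media` is the set of labels making up the reference ecosystem.
    """
    if not shared_media:
        return 0.0
    hits = sum(1 for media in shared_media if media in reference_media)
    return hits / len(shared_media)
```

Calling `flag_ultra_active(tweets)` on a capture gives the accounts to look at first, and `reference_share(...)` can then be computed for each of them. A high share only shows overlap with the reference ecosystem; for the reasons given above, it cannot distinguish a troll factory from a highly motivated activist.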

C. Research challenges

The indicators, therefore, have weaknesses and biases inherent to their methods. Additionally, research in this area is blocked by the fact that:

- All of these indicators rely on databases of individual accounts. The reactions to the Disinfolab case last summer showed how sensitive this is, as it involves a societal choice between a surveillance society and a society of freedom. The introduction of GDPR makes analyzing these cases much more difficult, though not prohibited. Researchers are left in a complete gray area between Twitter's terms of use, which declare all data public, and legislation on sensitive data.

- These studies revolve around highly political debates where it is almost impossible to participate without receiving a barrage of comments from people who do not even read the content but immediately consider it propaganda or a disguised government operation.

- Although there are many signs of interference, their impact is uncertain, and the narrative that fake news led to Trump's election or Brexit is unsupported. More generally, the fake news debate is a major axis of diplomatic influence between the Atlantic bloc and the Russian/Chinese bloc, with each accusing the other (e.g., the Yellow Vests between the U.S. and Russia). The debate vacillates between the public sphere and the intelligence community: even if the state is aware of external manipulation attempts, it cannot use its intelligence services to provide proof in the public sphere, so it seeks public publications to ensure visibility. Studies are all politically repurposed, often with huge oversimplifications. The distortion was striking in the Disinfolab case, where a comparison with a reference ecosystem deemed Russophile was interpreted as "Russian bots."

- Moreover, the chicken-and-egg debate remains: if simple messages on social media can work, it is because there are real flaws being exploited.

The conclusion is that the issue can hardly be addressed by combating disinformation through analysis and investigation alone. Most symposia and conferences on the topic conclude that analysis and study alone are insufficient. Two solutions have emerged:

- fact-checking (driven by a media lobby that saw an opportunity to recover a series of budgets), and

- media literacy.

The first solution views individuals as lost and troubled by disinformation, who need an antidote. However, most studies on disinformation show that those spreading it are completely disconnected from the media ecosystem, so we end up with people seeing the disinformation but never the debunking, while those seeing the debunking never saw the disinformation in the first place.

The second solution, media literacy, takes so much time that it seems distant compared to the current issue.

Furthermore, there are almost no experts on these issues or on specific content niches. Those with the technical and theoretical background to conduct such studies are quickly recruited by different organizations and thus no longer have a public voice. The experts brought together to address these issues are, therefore, people who take advantage of the debate's visibility to advance themselves or are invited to share their expertise in a field related to fake news (filter bubbles, algorithms, social networks, etc.). I will forever remember a hearing before a Belgian minister, where an expert told me afterward, "Anyway, I don't care; it's just that this matter consumes energy." In short, we are in complete confusion since no question has an answer.

In the current state of affairs, it is impossible to prove Russian interference based on the indicators at our disposal. Only GAFA (Google, Apple, Facebook, Amazon) and investigative journalists have the keys.
